One of the more recent trends in IT is Serverless architecture. As with any hype in its early stages, a lot of ambiguity exists about what it is and what problems it solves. So recently a colleague of mine, Jan van Zoggel (you can read his awesome blog here), and I took a look at what Serverless architecture is.

Some definitions, some ambiguity and some other terms

The Serverless trend emerged, like so many others these days, in the realm of web and app development, where all logic and state traditionally handled by the backend was placed in a “*aaS” (as a service) offering. This got the name BaaS or mBaaS: (mobile) Backend as a Service.

Meanwhile Serverless also came to mean something slightly different, which of course got another “*aaS” acronym: FaaS, or Function as a Service. In FaaS, certain application logic (Functions) runs in ephemeral runtimes in the cloud, where the cloud provider in question handles all runtime-specific configuration and setup. This includes things like networking, load balancing, scaling and so on. More on these ephemeral runtimes below.
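To make the idea of a Function a bit more concrete, here is a minimal sketch in Python. The `handler(event, context)` shape mirrors the convention used by AWS Lambda's Python runtime; the `name` field in the event and the layout of the response are assumptions made purely for illustration, not a format any provider requires.

```python
import json


def handler(event, context=None):
    """A stateless function: everything it needs arrives in the event."""
    # 'name' is a hypothetical field chosen for this example.
    name = event.get("name", "world")
    # No state is kept between invocations; the result depends only on the input.
    return {"statusCode": 200, "body": json.dumps({"message": f"Hello, {name}!"})}


if __name__ == "__main__":
    # Locally this is just a plain function call; in a FaaS setup the
    # provider would make this call in response to an event.
    print(handler({"name": "Serverless"}))
```

Note that nothing in the function deals with ports, threads or servers. That part is exactly what the provider is supposed to take off your hands.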

Even though it is still quite early in the world of Serverless architectures, the FaaS definition of Serverless architecture seems to be gaining more traction than the mBaaS definition. This doesn’t mean mBaaS has no right to exist, just that its association with Serverless architecture is fading away.

To return to the definition of Serverless architecture and FaaS, it seems that the abstraction of (all) runtime configuration, setup and complexity is what Serverless is all about. Of course it is not truly serverless. Your stuff has to run somewhere.

With this abstraction in mind, I find the definition by Justin Isaf the most to the point:

“Abstracting the server away from the developer. Not removing the server itself”

You can find his presentation about Serverless on YouTube: https://www.youtube.com/watch?v=5-V8DKPsUoU

The evolution of runtimes

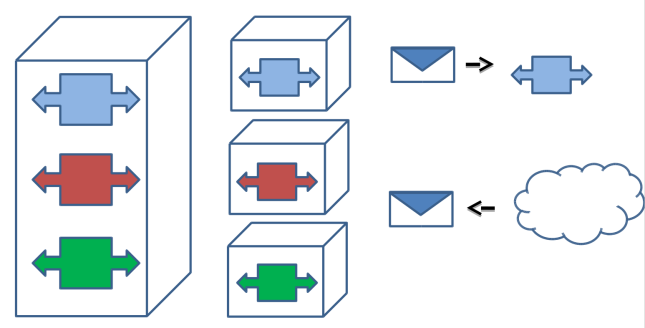

When looking not just at Serverless architecture but also at other fairly recent trends in IT (we’re looking at you, Cloud, Microservices and Containerization), we can detect an “evolution of runtimes”.

From one to many runtimes.

Traditionally you had one gigantic server, virtualized or bare metal, on which an application server of some sort was installed. All applications, modules and components of a system would be deployed to and run on this application server. Even though these application servers were usually set up in multiples to provide at least some high availability, further scaling and moving around these so-called monolithic runtimes was hard at best and downright impossible at worst (looking at you, mainframe!).

Recently a trend emerged to not only split complex monolithic systems into autonomous modules, called microservices, but also to separate these microservices further by running each of them in its own runtime, usually a container of some sort. Throw in a container orchestration tool like Kubernetes or Docker Swarm and all of a sudden scaling out and moving around runtimes becomes much, much simpler. Every application is neatly separated from the others, and specific requests or messages for a particular app are handled by its runtime (container).

The next step in this evolution is what Serverless architecture and FaaS are all about. Where a container runtime handles all requests of the app it is serving, a FaaS function or app runtime handles only one request and turns itself off after the function completes. This turning off after every invocation leads to a couple of characteristics which define a Serverless architecture.

- No “Always on” server – when no invocation requests are being handled, and a traditional runtime would be sitting idle, a Serverless architecture simply has nothing running.

- “Infinite” scalability – since every request is handled by its own separate runtime, and the provisioning and running of these FaaS functions is handled by the (cloud) provider, the application is theoretically infinitely scalable.

- Zero runtime configuration – the configuration of your application server, Docker container or server is left completely to the (cloud) provider, which gives you a “No-Ops” environment where the team only has to worry about the application logic itself.


You can compare it with modern cars that turn off the engine while waiting at a traffic light. When the light turns green and the engine has to perform, it starts. While the car is standing still, the engine is simply turned off completely.

Since every request is handled by its own runtime, scalability comes out of the box.
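The one-runtime-per-request lifecycle can be sketched with a toy model in Python. `ToyPlatform` is a hypothetical stand-in for a FaaS provider invented for this post, not a real API, and the counters only exist to make the lifecycle visible. Real platforms differ in many details.

```python
class ToyPlatform:
    """Hypothetical stand-in for a FaaS provider; not a real API."""

    def __init__(self, function):
        self.function = function
        self.running = 0  # runtimes alive right now
        self.started = 0  # runtimes started so far

    def invoke(self, event):
        # Spin up a fresh runtime for this single request.
        self.running += 1
        self.started += 1
        try:
            return self.function(event)
        finally:
            # The runtime is torn down once the function completes.
            self.running -= 1


def double(event):
    # A tiny stateless function used as the workload.
    return event["value"] * 2


if __name__ == "__main__":
    platform = ToyPlatform(double)
    results = [platform.invoke({"value": n}) for n in range(3)]
    print(results)           # [0, 2, 4]
    print(platform.started)  # 3, one runtime per request
    print(platform.running)  # 0, nothing is left running while idle
```

After the three requests nothing is running anymore, which is the engine-off-at-the-traffic-light behaviour from the comparison above.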

Commercial offerings

At the time of writing, the three largest commercial offerings for implementing a Serverless architecture come, not surprisingly, from the “big three” cloud providers.

Equally unsurprising, each Serverless offering is fully integrated with the other cloud offerings of its provider. This means you can invoke your FaaS function from various triggers provided by other cloud solutions.
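How triggers and functions relate can be sketched in a few lines of Python. Everything here is hypothetical: `TriggerRegistry`, the source names `storage.upload` and `http.request`, and the event fields are made up for illustration and do not correspond to any provider's API. With a real provider this wiring is configuration on their side rather than code you write.

```python
from collections import defaultdict


class TriggerRegistry:
    """Hypothetical event router; a real provider does this wiring for you."""

    def __init__(self):
        self._functions = defaultdict(list)

    def on(self, source):
        # Decorator that subscribes a function to a trigger source.
        def register(function):
            self._functions[source].append(function)
            return function
        return register

    def emit(self, source, event):
        # Invoke every function subscribed to this source.
        return [function(event) for function in self._functions[source]]


registry = TriggerRegistry()


@registry.on("storage.upload")
def make_thumbnail(event):
    return f"thumbnail for {event['file']}"


@registry.on("http.request")
def greet(event):
    return f"hello {event['user']}"


if __name__ == "__main__":
    print(registry.emit("storage.upload", {"file": "cat.png"}))
    print(registry.emit("http.request", {"user": "jan"}))
```

The functions themselves know nothing about where the event came from, which is what makes it easy to hook the same kind of function up to storage, an API gateway or a message queue.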

Some final thoughts

Even though it’s pretty early in the hype cycle, Serverless architecture and FaaS definitely have some attractive characteristics. The potential of Serverless functions is considerable: basically all short-running, stateless transactions can be handled by a FaaS function. And when combined with other cloud offerings like storage and API gateways, even more complex applications can be created with Serverless architecture. With the cloud-native nature and scalability of FaaS your application is completely ready for the 21st century, and of course buzzword compliant.