In this blog series we’re installing Openshift Community Edition (known as OKD, I don’t get it either) on AWS instances based on Centos. In the previous blog post, we set up the AWS instance and went through several steps to preconfigure the host. In this part we’ll run the actual Openshift installation using Ansible. To verify that the cluster is running, we’ll deploy and scale a simple web application using the web console.

In this blog series, Jan van Zoggel and I focus on installing an all-in-one OKD cluster on a single AWS instance and then manually expanding the cluster with a second AWS instance. This scenario was used for the Terra10 playground environment, and this guide should be used as such.

The first step towards installing the Openshift cluster is grabbing all the install scripts. Because OKD is open source, you can simply grab these from the official Github page. We’re going to put them in /home/centos/openshift-ansible:

cd ~

git clone https://github.com/openshift/openshift-ansible

cd openshift-ansible

git checkout release-3.11

It’s important to check out the branch of the version you wish to install, otherwise you’re using the scripts that are currently in development and you’re likely to run into some bugs. We’re using OKD 3.11, which is the latest release at this time (based on Kubernetes 1.11), but if you want to install a different version, just check out that branch instead.
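
As a quick sanity check before running any playbook, you could confirm which branch the clone is on. The function below is only a sketch: the name check_release_branch is made up for this guide, and the defaults simply mirror the path and branch used above.

```shell
# Sketch: confirm the openshift-ansible clone is on the expected release branch.
# check_release_branch is a hypothetical helper, not part of openshift-ansible.
check_release_branch() {
  local repo_dir="${1:-$HOME/openshift-ansible}"
  local expected="${2:-release-3.11}"
  local current
  # Ask git for the name of the branch that is currently checked out.
  current="$(git -C "$repo_dir" rev-parse --abbrev-ref HEAD)" || return 2
  if [ "$current" = "$expected" ]; then
    echo "OK: $repo_dir is on $expected"
  else
    echo "WARNING: $repo_dir is on $current, expected $expected" >&2
    return 1
  fi
}

# Usage: check_release_branch ~/openshift-ansible release-3.11
```

If the function prints a warning, run git checkout for the right branch before continuing.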

Ansible uses several playbooks to install your (single node) cluster, but it also requires an inventory file which contains all your desired hosts and settings. Creating the inventory file can be a bit challenging because there are a lot of variables to choose from. Although there are many online examples, you should be cautious because the available variables change with each OKD release. For example, in earlier releases you marked a node as an ‘infra’ node by setting the ‘region’ to ‘infra’ using the ‘openshift_node_labels’ variable. In the latest release this has changed completely, as you now have to use the ‘openshift_node_group_name’ variable and set it to ‘node-config-infra’. You can find the latest list of variables here, and the blog posts still help to determine some example values.

I’ve used the following inventory file to create the single node cluster, and we’ll go through each of these values in more detail below. Replace the contents of /etc/ansible/hosts with the following:

# Create an OSEv3 group that contains the masters, nodes, and etcd groups

[OSEv3:children]

masters

nodes

etcd

# Set variables common for all OSEv3 hosts

[OSEv3:vars]

ansible_ssh_user=centos

ansible_become=yes

openshift_deployment_type=origin

# using htpasswd authentication on master

openshift_master_identity_providers=[{'name': 'htpasswd_auth', 'login': 'true', 'challenge': 'true', 'kind': 'HTPasswdPasswordIdentityProvider'}]

# disable memory and disk checks on the EC2 host

openshift_disable_check=memory_availability,disk_availability

# set the default subdomain

openshift_master_default_subdomain=apps.brewery.terrax.io

# host group for masters

[masters]

brewery.terrax.io openshift_public_hostname=brewery.terrax.io

# host group for etcd

[etcd]

brewery.terrax.io

# host group for nodes, includes role info

[nodes]

brewery.terrax.io openshift_node_group_name='node-config-all-in-one'

Although you can set many more variables, these cover the basics. The file is divided into several sections, each introduced by a name in square brackets. Let’s start at the OSEv3:vars section.

The ansible_ssh_user variable is set to either root or a user that belongs to the wheel group. On our AWS image we use ‘centos’, as this user is allowed to execute everything out of the box. When you set the ansible_become variable, you tell Ansible that it needs to use ‘sudo’ to run commands. The openshift_deployment_type variable is set to ‘origin’, as we use the open source version of Openshift.

In our playground environment we’re not going to fuss around with LDAP, so we’d like Openshift to authenticate against a local htpasswd file. Simply set the openshift_master_identity_providers variable to the specified value; we’ll add the actual user after the installation. Next, we use openshift_disable_check to prevent Ansible from checking the memory and disk requirements. This can be useful if you chose the t3.medium instead of the t3.xlarge for budget reasons. The final variable in this section is openshift_master_default_subdomain, which tells Openshift that apps are exposed at [appname]-[projectname].apps.brewery.terrax.io. It’s very important that this value matches the wildcard cname you used in the DNS configuration in part 1.
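
To make the effect of the default subdomain concrete, here is a small sketch of how the default hostname of an app is put together: the app name, a dash, the project name, then the subdomain. The function name default_route_host is hypothetical, and the values are the ones used for the example endpoint later in this guide.

```shell
# Sketch: build the default hostname for an app, following the
# [appname]-[projectname].[default subdomain] pattern.
# default_route_host is a hypothetical helper for illustration only.
default_route_host() {
  local app="$1"
  local project="$2"
  local subdomain="$3"
  echo "${app}-${project}.${subdomain}"
}

default_route_host beerdecision terra10-demo apps.brewery.terrax.io
# prints: beerdecision-terra10-demo.apps.brewery.terrax.io
```

Every hostname produced this way has to be covered by the wildcard cname, which is why the subdomain in the inventory and the DNS record need to match.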

The masters section lists all AWS instances used for the master role in your Openshift cluster. You can set up multiple masters (at least 3 for High Availability), but this involves setting up several other variables and a loadbalancer host. The host entry also carries the openshift_public_hostname attribute, which matches the other cname you’ve set up in the DNS in part 1.

The etcd section in this case points to our master host, as it’s common to co-locate etcd with the masters. In the nodes section, you specify all your nodes (masters, infra nodes and compute nodes combined) and you use the openshift_node_group_name attribute to specify whether a node has a master, infra, compute or all-in-one role. As we only have one EC2 instance, we’ll pick the latter.
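
Before running any playbook, it can help to let Ansible show how it reads the inventory. The sketch below assumes Ansible 2.4 or newer (for the ansible-inventory command) and the default inventory path; check_inventory is a made-up name.

```shell
# Sketch: sanity-check the inventory before running the playbooks.
# check_inventory is a hypothetical helper; it assumes Ansible 2.4+.
check_inventory() {
  local inventory="${1:-/etc/ansible/hosts}"
  # Print the group tree so you can see which hosts ended up in masters, nodes and etcd.
  ansible-inventory -i "$inventory" --graph || return 1
  # Confirm Ansible can reach every host in the OSEv3 group over SSH.
  ansible -i "$inventory" OSEv3 -m ping
}

# Usage: check_inventory /etc/ansible/hosts
```

If the graph shows a host in the wrong group or the ping fails, fix the inventory or the SSH access first.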

Once you’ve got the inventory file set up, you can start with the prerequisite Ansible playbook. This playbook checks if your config file makes sense and checks the hosts in the files to verify that all required components are set.

cd ~/openshift-ansible

ansible-playbook ./playbooks/prerequisites.yml

Ansible will look at /etc/ansible/hosts by default; use -i to specify another location. Don’t worry if you hit an error while running the playbook. The prerequisite playbook (and the installation playbook to some extent) is rerunnable, so you can modify your inventory file and repeat the playbook until you get the desired result.

The prerequisite playbook should verify that all hostnames and URLs in your inventory are resolvable, but make sure they resolve through DNS! If you put hostnames in ‘/etc/hosts’ you’ll fool the Ansible scripts, but it is /etc/resolv.conf, not /etc/hosts, that is copied into the containers. As a result, the containers will be unaware of the hostnames and this will cause a fatal error in the installation part. If you’ve got all cnames in the DNS as described in part 1, you should be fine.
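
One way to check this yourself is to query DNS directly, since dig does not consult /etc/hosts. The sketch below assumes dig is installed (on Centos it ships in the bind-utils package); resolves_via_dns is a hypothetical helper name.

```shell
# Sketch: check that a hostname resolves through DNS itself, not via /etc/hosts.
# resolves_via_dns is a hypothetical helper; it assumes dig is available.
resolves_via_dns() {
  local host="$1"
  local answer
  # dig sends the query to the resolvers in /etc/resolv.conf and ignores /etc/hosts.
  answer="$(dig +short "$host")"
  if [ -n "$answer" ]; then
    echo "OK: $host resolves via DNS"
  else
    echo "FAIL: $host has no DNS record" >&2
    return 1
  fi
}

# Usage, including an arbitrary name under the wildcard subdomain:
#   resolves_via_dns brewery.terrax.io
#   resolves_via_dns test.apps.brewery.terrax.io
```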

Once the prerequisite playbook completes without errors, you can run the installation playbook:

ansible-playbook ./playbooks/deploy_cluster.yml

This may take a while to complete, as Ansible will now install Kubernetes, create an internal registry, set up multiple internal pods and you’ll even get Prometheus and Grafana monitoring out of the box.
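
If you expect to repeat the installation a few times, the two playbook runs can be chained so that the deployment only starts when the prerequisites pass. This is a sketch with a made-up function name (run_okd_install); the defaults are the inventory and clone locations used in this guide.

```shell
# Sketch: run both playbooks in order, stopping if the prerequisites fail.
# run_okd_install is a hypothetical helper, not part of openshift-ansible.
run_okd_install() {
  local inventory="${1:-/etc/ansible/hosts}"
  local repo_dir="${2:-$HOME/openshift-ansible}"
  (
    # The subshell keeps the caller's working directory unchanged.
    cd "$repo_dir" || exit 1
    ansible-playbook -i "$inventory" ./playbooks/prerequisites.yml || exit 1
    ansible-playbook -i "$inventory" ./playbooks/deploy_cluster.yml
  )
}

# Usage: run_okd_install /etc/ansible/hosts ~/openshift-ansible
```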

Once you’re done, verify that your ec2 instance is now running as an Openshift node with access to the API by typing the following command:

oc get nodes

This output shows you a couple of things, mainly that:

- The oc (or kubectl) command now logs in to your master and can be used to access the cluster.

- Your node has status ‘Ready’ and owns the compute, infra and master roles.
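
If you’d rather script this check than read the output by eye, something along these lines could work. It is a sketch: all_nodes_ready is a made-up name, and it treats anything other than a plain ‘Ready’ status (for example ‘Ready,SchedulingDisabled’) as not ready.

```shell
# Sketch: succeed only when at least one node exists and every node reports "Ready".
# all_nodes_ready is a hypothetical helper built on the oc command shown above.
all_nodes_ready() {
  # Columns without headers: NAME STATUS ROLES AGE VERSION
  oc get nodes --no-headers | awk '
    { total++ }
    $2 != "Ready" { bad++; print "Not ready: " $1 " (" $2 ")" }
    END { if (total == 0 || bad > 0) exit 1 }
  '
}

# Usage: all_nodes_ready && echo "cluster looks healthy"
```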

Before we can open a browser and access the web console, we need to set up a user to log in to the cluster. For this playground environment, we’ve specified htpasswd_auth in the inventory file. Simply set up the admin user like this:

sudo htpasswd -c /etc/origin/master/htpasswd admin

You’ll be prompted to enter a new password. Although this is just a playground, please pick a strong password as this is used to access your public web console. After that, just head over to your web console using the URL you’ve set up in the inventory file! In the example above, this would be:
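
One thing to watch out for: the -c flag creates the htpasswd file from scratch, so repeating the command above for a second user would wipe the first one. The sketch below only passes -c when the file does not exist yet; add_okd_user is a hypothetical name and the default path is the one used above.

```shell
# Sketch: add or update a user without clobbering existing entries.
# add_okd_user is a hypothetical helper around the htpasswd command.
add_okd_user() {
  local user="$1"
  local file="${2:-/etc/origin/master/htpasswd}"
  if sudo test -f "$file"; then
    # File exists: update it in place. Passing -c here would remove all other users.
    sudo htpasswd "$file" "$user"
  else
    # No file yet: -c creates it with this user as the first entry.
    sudo htpasswd -c "$file" "$user"
  fi
}

# Usage: add_okd_user developer
```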

https://brewery.terrax.io:8443/console

You might hit a certificate error as you haven’t set up certificates yet for this domain. Just ignore the alert and head over to the service catalog.

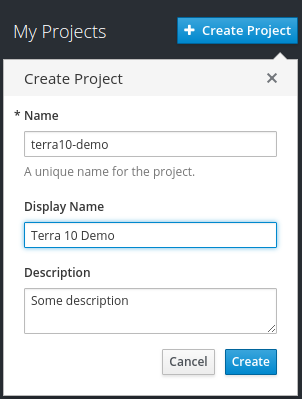

Here, we’re going to create our first project. In the upper right corner, press the blue ‘Create Project’ button and enter your project name (the example endpoint further down assumes ‘terra10-demo’). Hit the Create button when you’re done.

Next, click your project from the right pane and you’ll automatically go to the dashboard page of your new project. Hit the blue ‘Browse Catalog’ button to add a template.

Pick ‘Nginx HTTP server and reverse proxy’ from the catalog. In the dialog screen, click Next. As the application name, we’ll use ‘beerdecision’. You can configure a lot of settings on this page, but for now we only focus on the Git Repository URL and the Context Directory. Point the Git Repository to:

https://github.com/mhjmaas/beerdecision.git

and set your Context Directory to:

public

That’s all we need, so hit Next and ignore the binding for now. As you hit ‘Create’, Openshift will connect to Github and download the source code for a small React web application into a new Nginx container. This might take a few seconds.

It won’t take long before you see your Pod appear. The blue circle indicates that the Nginx container is ready to handle the traffic. You can use the up and down arrows to increase or decrease the number of pods Openshift uses to handle the traffic.
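
The same scaling can be done from the command line on the master. The sketch below assumes the template created a DeploymentConfig named after the application, and that you used ‘beerdecision’ in a project called ‘terra10-demo’; scale_app is a made-up helper name.

```shell
# Sketch: scale an app from the CLI instead of the web console arrows.
# scale_app is a hypothetical helper; it assumes a DeploymentConfig named after the app.
scale_app() {
  local app="$1"
  local project="$2"
  local replicas="$3"
  # Set the desired number of pods for the deployment config.
  oc scale "dc/${app}" --replicas="$replicas" -n "$project" || return 1
  # List the pods so you can watch the new ones start.
  oc get pods -n "$project"
}

# Usage: scale_app beerdecision terra10-demo 3
```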

If you’ve used the examples in this guide, your React app should now be available on the following endpoint:

http://beerdecision-terra10-demo.apps.brewery.terrax.io

The app will help you decide what beer to drink! You can add options and the random selection will change as well.

Pretty cool right? But what if the Git code changes? If the developer decides to make a code change, you can simply hit the ‘start build’ button using the hamburger icon on the right and Openshift will download the latest code from Github into a second Nginx image. Next, all traffic will automatically move to the new pod without downtime for the app users!
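
A new build can also be started from the command line. This is a sketch under the assumption that the build configuration carries the application name (‘beerdecision’) and lives in a project called ‘terra10-demo’; rebuild_app is a hypothetical helper name.

```shell
# Sketch: start a new build from the CLI and follow its log.
# rebuild_app is a hypothetical helper; it assumes a BuildConfig named after the app.
rebuild_app() {
  local app="$1"
  local project="$2"
  # --follow streams the build log until the build finishes.
  oc start-build "$app" -n "$project" --follow || return 1
  # Show the build history, including the build that just ran.
  oc get builds -n "$project"
}

# Usage: rebuild_app beerdecision terra10-demo
```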

So that’s it for this section on the installation of Openshift. We’ve set up a single node cluster on our AWS instance and we’ve even deployed our very first code into a project. In the last section we’re going to add a second AWS instance and we’ll set it up as a compute node to handle all application pods. Stay tuned for part 3!

A special thanks to Marcel Maas for providing the React Git repository for this guide.
