Openshift is gaining in popularity, judging by the increasing number of large companies considering Openshift Enterprise as their new container platform. Red Hat offers Openshift as a cloud service, and you can also install Openshift Enterprise on-premises on Red Hat Enterprise Linux 7, but for this blog post I decided to get my hands dirty with the open source side of the platform. As this guide will show, you can enjoy the power of the container platform without any servers or license subscriptions of your own. In a series of three blog posts, we’ll look at installing the open source edition of Openshift, also known as OKD. As our open source Linux we picked Centos 7, which is similar to RHEL 7. To sweeten the deal, we’ll use the AWS cloud to scale our platform.

In this blog post Jan van Zoggel and I focused on installing an all-in-one OKD cluster on a single AWS instance and expanding the cluster with a second AWS instance manually. This scenario was used for the Terra10 playground environment and this guide should be used as such.

As most readers already know, containers changed the way we release and run applications. There are several benefits to running your applications in containers, but managing and scaling them can be difficult as the number of containers and Docker images grows. Openshift manages these containers with Kubernetes (which is called K8s by the cool kids): the container management layer based on Google’s design. On top of that, Openshift adds several tools such as a built-in CI/CD mechanism, Prometheus monitoring and a nice webconsole if you’re not a fan of the built-in CLI.

Developers who want the basic Openshift experience without the hassle of installing an entire cluster can easily install minishift (or minikube if you want to stick to K8s only). In fact, we’ve got some labs to get you started. However, if you’re interested in an installation on multiple hosts so you can play around with High Availability (and you don’t mind diving into the various infrastructure challenges), you’ll have to do a full installation.

In part 1 of this blog series, we’ll briefly look at setting up an AWS instance with Centos 7. We’ll also look at installing the appropriate packages, configuring Docker storage, setting up SSH keypairs and setting up the DNS configuration using AWS Route 53.

In part 2, we’ll create an inventory file and run the “prerequisite” and “install” Ansible playbooks to set up an all-in-one single-node cluster. Next, we’ll create a simple user and test with a basic project.

Part 3 of the blog series will show you how to (manually) configure a second AWS instance, scale out the cluster using a playbook, and move your pods to this second host without any network or DNS changes. (Using an AWS Auto Scaling group would allow you to add more hosts to your cluster as your load grows, but this is out of the scope of this blog.)

Let’s get started!

Setting up an AWS instance

Creating an instance in AWS is easy. We could use AWS CloudFormation to set up everything as Infra-as-Code, but for now we learn more by doing it manually. So just hit the ‘launch instance’ button in the AWS console (assuming you have everything set up), search for Centos in the AWS Marketplace and choose CentOS 7 from the list. Note that you can also use a RHEL 7 image for this, but additional charges will apply. The official requirements for masters and nodes are pretty high, but you can override the check on this in the installation, as we’ll describe in Part 2. For our playground we used t3.medium, despite the warning about the 4-core minimum for co-located etcd; the smaller instance might also cause some memory-related performance issues. Use t3.xlarge if you want to meet the official requirements.

In the instance detail screen, select a subnet from the dropdown (your future instances will have to run in the same subnet) and make sure you have auto-assign Public IP turned ON. All the other settings are adjustable to your own AWS preference and spending budget, but remember to set a primary IP within the subnet range at ‘eth0’ further down the screen. This is just an internal IP that this instance uses to talk to any future instances you might want to add, as you’ll see in Part 3.

In the ‘Add Storage’ section you get 8 GB by default for the root filesystem, but 40 GB is required because /var/lib will grow a lot. You should also add a second block device with 50 GB of disk space for Docker storage. In step 6 you can create a security group; by default, port 22 is enabled for SSH. For security reasons you can select ‘My IP’ in the source dropdown, so that only SSH connections from your current location are allowed. To enable communication with future AWS instances you can add all documented ports to this security group. Note that it’s best practice to use the internal subnet you defined at eth0 as the ‘source’ for these.
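If you prefer the command line over the wizard, the same choices could be sketched with the AWS CLI. This is only a sketch under a few assumptions: the AMI, subnet, security group and key pair names are hypothetical placeholders, and the device names /dev/sda1 and /dev/sdb depend on the AMI you pick. The function only prints the command, so you can review it before running anything that costs money.

```shell
# Prints (does not run) an `aws ec2 run-instances` call that mirrors the
# wizard choices: public IP, 40 GB root volume, 50 GB Docker volume.
# Arguments: AMI id, subnet id, security group id, key pair name,
# and optionally an instance type (defaults to t3.medium).
build_run_instances_cmd() {
  local ami_id="$1" subnet_id="$2" sg_id="$3" key_name="$4"
  local instance_type="${5:-t3.medium}"
  printf '%s ' aws ec2 run-instances \
    --image-id "$ami_id" \
    --instance-type "$instance_type" \
    --key-name "$key_name" \
    --subnet-id "$subnet_id" \
    --security-group-ids "$sg_id" \
    --associate-public-ip-address \
    --block-device-mappings \
    "'DeviceName=/dev/sda1,Ebs={VolumeSize=40}'" \
    "'DeviceName=/dev/sdb,Ebs={VolumeSize=50}'"
  printf '\n'
}

# Example with hypothetical IDs (replace them with your own):
# build_run_instances_cmd ami-0123456789abcdef0 subnet-0abc sg-0abc brewery-key
```

Pass t3.xlarge as the fifth argument if you want to meet the official requirements.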

When you hit the ‘launch’ button at the end of the wizard you’ll get a PEM key which you can use to connect to your newly created instance. Keep this file in a safe spot, as anyone who has it can use it to connect to your instance! After a while you’ll see the public DNS entry appear at your EC2 instance. You’ll need this for the DNS setup. Please note that this DNS entry will change if you stop and start your instance.

Setting up the DNS

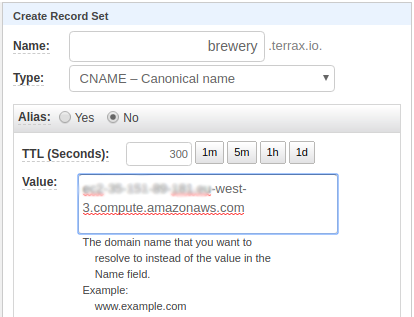

In this scenario we have a single node to handle all our traffic, which makes our DNS setup fairly easy. In the AWS Console, go to Route 53 and set up a hosted zone (e.g. terrax.io). In this hosted zone, add two CNAME records:

- ‘brewery’ (which points to your public DNS entry in the paragraph above)

- ‘*.apps.brewery’ (which in this case points to the same public DNS entry as ‘brewery’)

Using the above example cnames, your webconsole will be available at: https://brewery.terrax.io:8443/console

and your pods will run at

[projectname]-[appname].apps.brewery.terrax.io
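The two records above could also be created from the command line. As a rough sketch, the function below prints a Route 53 change batch for both CNAMEs; the TTL of 300 seconds and the example target hostname are assumptions, not values from our setup.

```shell
# Prints a Route 53 change batch (JSON) with the two CNAME records.
# Arguments: hosted zone name, host label, public DNS name of the instance.
build_change_batch() {
  local zone="$1" host="$2" target="$3"
  cat <<EOF
{
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "${host}.${zone}",
        "Type": "CNAME",
        "TTL": 300,
        "ResourceRecords": [{ "Value": "${target}" }]
      }
    },
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "*.apps.${host}.${zone}",
        "Type": "CNAME",
        "TTL": 300,
        "ResourceRecords": [{ "Value": "${target}" }]
      }
    }
  ]
}
EOF
}

# Example (the target hostname and zone ID are hypothetical placeholders):
# build_change_batch terrax.io brewery ec2-203-0-113-10.eu-west-1.compute.amazonaws.com > batch.json
# aws route53 change-resource-record-sets --hosted-zone-id YOUR_ZONE_ID --change-batch file://batch.json
```

Because the public DNS entry changes when the instance is stopped and started, rerunning this with the new target is a quick way to repoint both records.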

Setting up the packages on the host

Next, log on to the host using your new SSH key. On Linux and macOS you can simply run ssh -i [your-key-file] centos@brewery.terrax.io. Note that the centos user comes out of the box when you pick the Centos image from the AWS Marketplace.

Once you’re logged in, run the following commands:

sudo yum -y install centos-release-openshift-origin etcd wget git net-tools bind-utils iptables-services bridge-utils bash-completion origin-clients yum-utils kexec-tools sos psacct lvm2 NetworkManager docker-1.13.1

This installs all required packages on Centos that are mentioned on the prerequisites page of OKD, as well as several packages that are missing from the Centos 7 image on AWS. NetworkManager should be enabled so it is available after a reboot:

sudo systemctl enable NetworkManager

Setting up Docker

We installed Docker, but to set up the second disk as docker storage device we need to first find the block device name using lsblk:

[centos@brewery ~]$ lsblk
NAME        MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
nvme0n1     259:0    0  40G  0 disk
└─nvme0n1p1 259:2    0  40G  0 part /
nvme1n1     259:1    0  50G  0 disk

As the example shows, the empty block device is called ‘nvme1n1’, so we can easily add it to the Docker storage configuration file:

cat <<EOF | sudo tee /etc/sysconfig/docker-storage-setup
DEVS=/dev/nvme1n1
VG=docker-vg
EOF
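If you’d rather not read the device name off the lsblk output by eye, something like the following sketch could pick it out for you. It assumes your lsblk supports the -P (key="value" pairs) output and the PKNAME column, and it treats any whole disk without partitions and without a mountpoint as ‘empty’. Double-check the result before handing a device to Docker, as the setup will claim the disk.

```shell
# Reads `lsblk -P -o NAME,TYPE,PKNAME,MOUNTPOINT` output on stdin and prints
# every whole disk that has no partitions and no mountpoint.
find_empty_disks() {
  local line d NAME TYPE PKNAME MOUNTPOINT
  local disks="" parents=" "
  while IFS= read -r line; do
    NAME="" TYPE="" PKNAME="" MOUNTPOINT=""
    # Each line looks like NAME="nvme0n1" TYPE="disk" ..., so eval sets the variables.
    eval "$line"
    # Remember every device that is the parent of another device.
    [ -n "$PKNAME" ] && parents="${parents}${PKNAME} "
    if [ "$TYPE" = "disk" ] && [ -z "$MOUNTPOINT" ]; then
      disks="$disks $NAME"
    fi
  done
  for d in $disks; do
    case "$parents" in
      *" $d "*) ;;              # has partitions, so it is in use
      *) echo "/dev/$d" ;;
    esac
  done
}

# Usage on the host:
# lsblk -P -o NAME,TYPE,PKNAME,MOUNTPOINT | find_empty_disks
```

With the disk layout from the example above, this would print /dev/nvme1n1.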

Next, run the Docker storage setup. This will use the disk you’ve added in the ‘DEVS’ section to create a volume group called ‘docker-vg’:

sudo docker-storage-setup

You can verify that the volume group was created by running ‘sudo vgdisplay’.

Docker is almost ready. We just need to add the following option to the ‘OPTIONS’ line in the /etc/sysconfig/docker file:

--insecure-registry=172.30.0.0/16
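Editing the file by hand works fine, but if you want to script this step, a small helper along these lines might do. It assumes the OPTIONS line is a single line wrapped in single quotes, which is how it looked on our Centos 7 image; check your own file first. The function name is just something we made up for this example.

```shell
# Appends the insecure-registry option to the OPTIONS='...' line of a
# Docker sysconfig file. Does nothing if the option is already present.
add_insecure_registry() {
  local file="$1"
  local opt="--insecure-registry=172.30.0.0/16"
  if grep -qF -- "$opt" "$file"; then
    return 0
  fi
  # Write to a temporary file first, then replace the original.
  sed "s|^OPTIONS='\(.*\)'|OPTIONS='\1 ${opt}'|" "$file" > "${file}.tmp" &&
    mv "${file}.tmp" "$file"
}

# Usage (from a root shell, since the file is owned by root):
# add_insecure_registry /etc/sysconfig/docker
```

Running it twice is harmless, because the second call finds the option and leaves the file alone.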

For your final step, make sure Docker starts at boot time:

sudo systemctl enable docker

Setup and prepare Ansible

Ansible is not available in the default repository of Centos, so we need to add the EPEL repository. EPEL is short for ‘Extra Packages for Enterprise Linux’ and we can easily add this to Centos with a single command:

sudo yum -y install https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

However, we don’t want to use EPEL for all packages, so we’re going to disable the repo file. For the installation of Ansible, we explicitly state that we need EPEL for this package:

sudo sed -i -e "s/^enabled=1/enabled=0/" /etc/yum.repos.d/epel.repo
sudo yum -y --enablerepo=epel install ansible pyOpenSSL

Generating a SSH-keypair

Ansible uses SSH to connect to all hosts in your inventory file, even if you’re only using a single host. To achieve this, you’ll have to set up an SSH keypair for the centos user so login works without passwords. You already have an SSH keypair from AWS, but it’s not recommended that you use this! Placing your private (generic) AWS keys in multiple locations is a security hazard. Besides, setting up a separate SSH keypair is easy:

ssh-keygen -b 4096

Accept all defaults and you’ll find the id_rsa and id_rsa.pub files in the /home/centos/.ssh folder. Next, add the id_rsa.pub to authorized_keys:

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

You can test whether it works by connecting without a password:

ssh $(hostname)
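When you rebuild the playground a few times, the interactive steps above get old quickly. A non-interactive version could look roughly like this sketch. The two function names are our own invention, and the empty passphrase (-N "") is an assumption that matches accepting the defaults.

```shell
# Generates a passphrase-less 4096-bit RSA keypair unless the key file exists.
generate_key() {
  local key_file="$1"
  [ -f "$key_file" ] || ssh-keygen -t rsa -b 4096 -N "" -f "$key_file" -q
}

# Appends a public key to an authorized_keys file, but only once,
# and makes sure the file has the strict permissions sshd expects.
authorize_key() {
  local pub_file="$1" auth_file="$2"
  touch "$auth_file"
  chmod 600 "$auth_file"
  grep -qxF -- "$(cat "$pub_file")" "$auth_file" || cat "$pub_file" >> "$auth_file"
}

# Usage as the centos user:
# generate_key ~/.ssh/id_rsa
# authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
```

Both functions are safe to run again: an existing key is left untouched and the public key is never added twice.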

That’s it for the preconfiguration! In the next part of this blog series, we’ll use this host to run the appropriate Ansible scripts. Stay tuned for Part 2!
