JBoss Fuse 6.2 has just been released, and one of the exciting new features is the new graphical data transformation component. Although it is still in tech preview, I got curious and started to play with it. There are already some good tutorials available, for example this one: https://vimeo.com/131250890

After tinkering around a bit with the new feature I found some peculiarities which I will share here.

Project setup

I decided to go with a setup similar to the one in the tutorial mentioned above: transforming an XML document into a JSON document.

The XSD for the XML document I used looks like this:

<?xml version="1.0" encoding="UTF-8"?>
<xs:schema attributeFormDefault="unqualified" elementFormDefault="qualified" xmlns:xs="http://www.w3.org/2001/XMLSchema">
  <xs:element name="Person">
    <xs:complexType>
      <xs:sequence>
        <xs:element name="PersonDetails">
          <xs:complexType>
            <xs:sequence>
              <xs:element type="xs:string" name="firstName"/>
              <xs:element type="xs:string" name="lastName"/>
              <xs:element type="xs:integer" name="age"/>
            </xs:sequence>
          </xs:complexType>
        </xs:element>
        <xs:element name="Addresses">
          <xs:complexType>
            <xs:sequence>
              <xs:element name="Address" minOccurs="0" maxOccurs="unbounded">
                <xs:complexType>
                  <xs:sequence>
                    <xs:element type="xs:string" name="street"/>
                    <xs:element type="xs:string" name="city"/>
                    <xs:element type="xs:string" name="country"/>
                  </xs:sequence>
                </xs:complexType>
              </xs:element>
            </xs:sequence>
          </xs:complexType>
        </xs:element>
      </xs:sequence>
    </xs:complexType>
  </xs:element>
</xs:schema>
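To get a feel for what a matching input document looks like, here is a minimal sketch that validates a sample document against the schema using the JDK's own validation API. The sample document is an assumption: the values are borrowed from the test output further down, and the class name `PersonSchemaSketch` is purely illustrative and not part of the project.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import javax.xml.XMLConstants;
import javax.xml.transform.stream.StreamSource;
import javax.xml.validation.Schema;
import javax.xml.validation.SchemaFactory;
import org.xml.sax.SAXException;

// Hypothetical helper, not part of the Fuse project.
class PersonSchemaSketch {

    // The schema from above, condensed into a string so the sketch is self-contained.
    static final String XSD =
          "<xs:schema attributeFormDefault='unqualified' elementFormDefault='qualified'"
        + " xmlns:xs='http://www.w3.org/2001/XMLSchema'>"
        + "<xs:element name='Person'><xs:complexType><xs:sequence>"
        + "<xs:element name='PersonDetails'><xs:complexType><xs:sequence>"
        + "<xs:element type='xs:string' name='firstName'/>"
        + "<xs:element type='xs:string' name='lastName'/>"
        + "<xs:element type='xs:integer' name='age'/>"
        + "</xs:sequence></xs:complexType></xs:element>"
        + "<xs:element name='Addresses'><xs:complexType><xs:sequence>"
        + "<xs:element name='Address' minOccurs='0' maxOccurs='unbounded'>"
        + "<xs:complexType><xs:sequence>"
        + "<xs:element type='xs:string' name='street'/>"
        + "<xs:element type='xs:string' name='city'/>"
        + "<xs:element type='xs:string' name='country'/>"
        + "</xs:sequence></xs:complexType></xs:element>"
        + "</xs:sequence></xs:complexType></xs:element>"
        + "</xs:sequence></xs:complexType></xs:element>"
        + "</xs:schema>";

    // Assumed sample input; the actual test file is not shown in this post.
    static final String SAMPLE =
          "<Person>"
        + "<PersonDetails><firstName>testFirstName</firstName>"
        + "<lastName>testLastName</lastName><age>32</age></PersonDetails>"
        + "<Addresses>"
        + "<Address><street>teststreet</street><city>testcity</city>"
        + "<country>testcountry</country></Address>"
        + "<Address><street>teststreet2</street><city>testcity2</city>"
        + "<country>testcountry2</country></Address>"
        + "</Addresses>"
        + "</Person>";

    // Returns true when the document is well-formed and matches the schema.
    static boolean isValid(String xml) {
        try {
            SchemaFactory factory = SchemaFactory.newInstance(XMLConstants.W3C_XML_SCHEMA_NS_URI);
            Schema schema = factory.newSchema(new StreamSource(new StringReader(XSD)));
            schema.newValidator().validate(new StreamSource(new StringReader(xml)));
            return true;
        } catch (SAXException e) {
            return false; // parse or validation error (or a broken schema)
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("sample valid: " + isValid(SAMPLE));
        System.out.println("bad age valid: " + isValid(SAMPLE.replace("<age>32</age>", "<age>abc</age>")));
    }
}
```

Note that `Address` may occur zero or more times, which is what makes it a repeating group in the mapping later on.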

The JSON sample doc looks like this:

{"name":"dummyName",
 "sirName":"dummySirName",
 "age":"32",
 "addresses":[
   {"streetname":"dummyStreet","cityname":"dummyCity","countryname":"dummyCountry"},
   {"streetname":"dummyStreet2","cityname":"dummyCity2","countryname":"dummyCountry2"}
 ]}

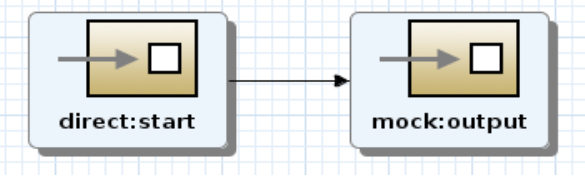

Since we are concentrating on the data transformation the rest of the project setup is not really all that important. I initially created a pass-through route with a direct and a mock endpoint:

To create a new data transformation, drag and drop the “Data Transformation” component from the palette onto the canvas.

This will bring up the wizard for setting up the data transformation.

The “Transformation ID” field is a unique identifier for the transformation, similar to the id field in a bean defined in the OSGi Blueprint configuration. The “Source Type” and “Target Type” drop downs allow you to select the source and target specifications.

The choices in the drop-down are Java, XML, JSON and Other. Other basically means you have to create your own Java type representation of the message format, for example using Bindy or BeanIO for CSV messages. The XML and JSON options generate the Java classes for you on the fly, which is very handy.

After selecting XML as the source type and JSON as the target type and clicking “Next”, the next screen is the XML type window.

Here we can select our XML schema as source file and select Person as root element. A preview is displayed in the “XML Structure Preview” window.

Clicking “next” we move on to the JSON type window.

When clicking “Finish” the graphical Data Transformation screen is displayed and we can begin mapping the fields.

To map the fields simply drag the source field to the required target field.

Note: since we have repeating groups in both the XML and JSON messages, we also need to drag and drop Address to addresses. The complete mapping looks like this:

Now we have to wire the components in the route together to finish things up.

Peeking under the covers

When switching to the source mode of the Blueprint configuration we can see what the wizard has set up:

<?xml version="1.0" encoding="UTF-8"?>
<blueprint xmlns="http://www.osgi.org/xmlns/blueprint/v1.0.0"
           xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
           xsi:schemaLocation="
             http://www.osgi.org/xmlns/blueprint/v1.0.0 http://www.osgi.org/xmlns/blueprint/v1.0.0/blueprint.xsd
             http://camel.apache.org/schema/blueprint http://camel.apache.org/schema/blueprint/camel-blueprint.xsd">
  <camelContext xmlns="http://camel.apache.org/schema/blueprint">
    <endpoint uri="dozer:myfirstdatatransform?sourceModel=persons.Person&amp;targetModel=personsjson.Personsjson&amp;marshalId=transform-json&amp;unmarshalId=persons&amp;mappingFile=transformation.xml" id="myfirstdatatransform"/>
    <dataFormats>
      <jaxb contextPath="persons" id="persons"/>
      <json library="Jackson" id="transform-json"/>
    </dataFormats>
    <route>
      <from uri="direct:start"/>
      <to ref="myfirstdatatransform"/>
      <to uri="mock:output"/>
    </route>
  </camelContext>
</blueprint>

As we can see, the wizard has created an endpoint configured with the Dozer component. It has also created two data formats: a JAXB data format for the XML schema and a Jackson JSON data format for the JSON document.

When looking at the project explorer we can also see the generated Java classes:
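As a rough idea of the shape of the XML-side classes, here is a simplified sketch. It is an assumption based on the schema, on the `sourceModel=persons.Person` setting and on the `persons.Person.Addresses.Address` import used later in this post. The real generated classes carry JAXB annotations and full getters and setters, which are left out here, and the wrapper class `PersonModelSketch` and its `sample()` method are purely illustrative.

```java
import java.math.BigInteger;
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified stand-in for the generated "persons" package.
class PersonModelSketch {

    // Mirrors the nesting of the anonymous complex types in the schema.
    static class Person {
        PersonDetails personDetails;
        Addresses addresses;

        static class PersonDetails {
            String firstName;
            String lastName;
            BigInteger age; // JAXB maps xs:integer to BigInteger by default
        }

        static class Addresses {
            private List<Address> address;

            // JAXB-style accessor for a repeating element: a lazily created live list.
            List<Address> getAddress() {
                if (address == null) {
                    address = new ArrayList<>();
                }
                return address;
            }

            static class Address {
                String street;
                String city;
                String country;

                Address(String street, String city, String country) {
                    this.street = street;
                    this.city = city;
                    this.country = country;
                }
            }
        }
    }

    // Builds an object graph with assumed test values.
    static Person sample() {
        Person person = new Person();
        person.personDetails = new Person.PersonDetails();
        person.personDetails.firstName = "testFirstName";
        person.personDetails.lastName = "testLastName";
        person.personDetails.age = BigInteger.valueOf(32);
        person.addresses = new Person.Addresses();
        person.addresses.getAddress().add(
                new Person.Addresses.Address("teststreet", "testcity", "testcountry"));
        person.addresses.getAddress().add(
                new Person.Addresses.Address("teststreet2", "testcity2", "testcountry2"));
        return person;
    }

    public static void main(String[] args) {
        Person person = sample();
        System.out.println(person.personDetails.firstName + " has "
                + person.addresses.getAddress().size() + " addresses");
    }
}
```

The repeating `Address` element ends up as a list, which is why the mapping needs the extra address to addresses link.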

Testing the transformation

Now that our transformation is finished we need to test it. Luckily, Fuse can generate a unit test for data transformations:

In the wizard simply point to the Blueprint containing the data transformation and the data transformation file and hit finish:

This generates a Camel JUnit test containing a stub Camel route, a ProducerTemplate and a helper method for reading files (for feeding the ProducerTemplate). The generated test class looks like this:

package nl.rubix.datatransformation.datatransformationtest;

import java.io.FileInputStream;

import org.apache.camel.EndpointInject;
import org.apache.camel.Produce;
import org.apache.camel.ProducerTemplate;
import org.apache.camel.builder.RouteBuilder;
import org.apache.camel.component.mock.MockEndpoint;
import org.apache.camel.test.blueprint.CamelBlueprintTestSupport;
import org.junit.Test;

public class TransformationTest extends CamelBlueprintTestSupport {

    @EndpointInject(uri = "mock:myfirstdatatransform-test-output")
    private MockEndpoint resultEndpoint;

    @Produce(uri = "direct:myfirstdatatransform-test-input")
    private ProducerTemplate startEndpoint;

    @Test
    public void transform() throws Exception {
    }

    @Override
    protected RouteBuilder createRouteBuilder() throws Exception {
        return new RouteBuilder() {
            public void configure() throws Exception {
                from("direct:myfirstdatatransform-test-input")
                    .log("Before transformation:\n ${body}")
                    .to("ref:myfirstdatatransform")
                    .log("After transformation:\n ${body}")
                    .to("mock:myfirstdatatransform-test-output");
            }
        };
    }

    @Override
    protected String getBlueprintDescriptor() {
        return "OSGI-INF/blueprint/blueprint.xml";
    }

    private String readFile(String filePath) throws Exception {
        String content;
        FileInputStream fis = new FileInputStream(filePath);
        try {
            content = createCamelContext().getTypeConverter().convertTo(String.class, fis);
        } finally {
            fis.close();
        }
        return content;
    }
}

To complete our test, we just fill in the test method and we’re good to go. For this example we simply feed the ProducerTemplate a sample document and check the log. Normally you would write a more detailed unit test containing assertions.

Our filled in test method looks like this:

@Test
public void transform() throws Exception {
    startEndpoint.sendBody("direct:myfirstdatatransform-test-input", readFile("src/test/resources/dummyInput.xml"));
}

Now run it as a JUnit test and inspect the log.

When inspecting the log we can see our transformation working and producing the following JSON document:

{"name":"testFirstName","sirName":"testLastName","age":"32","addresses":[{"streetname":"teststreet","cityname":"testcity","countryname":"testcountry"},{"streetname":"teststreet2","cityname":"testcity2","countryname":"testcountry2"}]}

A simple use case and a gotcha

Above we created one of the simplest transformations imaginable. A common use case that is still pretty straightforward is filtering inside a loop. In this example we want to filter out some addresses in our transformation: we only want to transform the address with “testcountry2” and ignore all others. A real-life scenario would be, for example, separating billing addresses from postal addresses.

Next to the basic field mapping we’ve looked at previously, the data transformation also offers a couple of other options.

After selecting the mapping we want to add the filter to (address -> addresses), we can click to get the extra options:

The “Set field” option is what we already used to select a source and target field for the transformation. “Set variable” allows us to use a variable that we can define on the variable tab of the source pane. “Set expression” allows us to leverage one of the expression languages supported by Camel to perform some data manipulation. Finally, “Add custom function” allows us to create a Java helper method in which custom transformation logic can be implemented.

Since the source of the data transformation is XML, I initially tried to perform the filtering with an XPath expression. After all, filtering in XPath is quite easy to do. However, here I ran into some behaviour I did not expect.

Selecting “Set expression” in the menu above opens a new window:

I selected xpath and entered the following expression:

/Person/Addresses/Address[country = "testcountry2"]

However, when observing the output log I encountered the following output:

{"name":"testFirstName","sirName":"testLastName","age":"32","addresses":["<?xml version=\"1.0\" encoding=\"UTF-16\"?>\n<Address>\n <street>teststreet2</street>\n <city>testcity2</city>\n <country>testcountry2</country>\n </Address>"]}

Basically, two things happened. The filtering itself is performed correctly, but the result of the XPath expression is pasted into the target field as an XML string instead of being mapped to the target fields. This is also mentioned in the tutorial I linked to above, but it does mean this simple use case is not supported using expressions, which seems a bit unintuitive. To implement the filter use case I ended up creating a custom function.
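The behaviour becomes easier to understand when the same expression is evaluated outside Fuse. The sketch below uses the JDK's XPath API on an assumed sample document (the values are borrowed from the test output, and the class name is illustrative). It shows that the expression selects an XML node rather than a set of field values; how the data transformation component then turns that node into a string is not something I have verified.

```java
import java.io.StringReader;
import javax.xml.xpath.XPathConstants;
import javax.xml.xpath.XPathExpressionException;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

// Hypothetical helper, not part of the Fuse project.
class XPathFilterSketch {

    // Assumed sample input with two addresses.
    static final String SAMPLE =
          "<Person><Addresses>"
        + "<Address><street>teststreet</street><city>testcity</city>"
        + "<country>testcountry</country></Address>"
        + "<Address><street>teststreet2</street><city>testcity2</city>"
        + "<country>testcountry2</country></Address>"
        + "</Addresses></Person>";

    // Evaluates the filter expression and returns the matching Address nodes.
    static NodeList filter(String xml) {
        try {
            return (NodeList) XPathFactory.newInstance().newXPath().evaluate(
                    "/Person/Addresses/Address[country = 'testcountry2']",
                    new InputSource(new StringReader(xml)),
                    XPathConstants.NODESET);
        } catch (XPathExpressionException e) {
            throw new IllegalArgumentException("Could not evaluate the XPath expression", e);
        }
    }

    public static void main(String[] args) {
        NodeList matches = filter(SAMPLE);
        System.out.println("matches: " + matches.getLength());
        // Each match is a DOM node, so it still has to be mapped field by field.
        System.out.println("node name: " + matches.item(0).getNodeName());
    }
}
```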

After removing the XPath expression by dragging and dropping the address field again, select “Add custom function” in the menu presented in the last screenshot. The following wizard pops up:

Click the “C” button to create a new class. In the screen that follows, select a Java package, enter a Class name and select the Return type and Parameter type.

The following class is generated:

package nl.rubix.datatransformation.datatransformationtest;

public class FilterTest {

    public java.util.List<?> map(java.util.List<?> input) {
        return null;
    }
}

Luckily we can use the Java 8 Stream API and lambda expressions, which ease the implementation of our filtering. The finished FilterTest class looks like this:

package nl.rubix.datatransformation.datatransformationtest;

import java.util.ArrayList;
import java.util.stream.Collectors;

import persons.Person.Addresses.Address;

public class FilterTest {

    // Keeps only the addresses in "testcountry2" and converts them to the JSON model.
    public ArrayList<personsjson.Address> map(ArrayList<Address> input) {
        return input.stream()
                .filter(address -> "testcountry2".equals(address.getCountry()))
                .map(this::createAddress)
                // Collect into an ArrayList explicitly instead of casting the result of toList().
                .collect(Collectors.toCollection(ArrayList::new));
    }

    // Copies the fields of an XML-side address to a JSON-side address.
    private personsjson.Address createAddress(Address personAddress) {
        personsjson.Address address = new personsjson.Address();
        address.setCityname(personAddress.getCity());
        address.setCountryname(personAddress.getCountry());
        // Note: the street is not copied, so streetname is absent from the JSON output.
        return address;
    }
}

Now when we run the unit test and observe the logging we can see the correctly formatted JSON.

{"name":"testFirstName","sirName":"testLastName","age":"32","addresses":[{"cityname":"testcity2","countryname":"testcountry2"}]}
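Because the custom function is plain Java, its logic can also be exercised without starting a Camel context. The sketch below does that with hand-written stand-ins for the generated classes, so the names `XmlAddress` and `JsonAddress` are assumptions and not the real `persons` and `personsjson` types. Unlike the listing above, this sketch also copies the street.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.stream.Collectors;

// Hypothetical, self-contained variant of the filter function.
class FilterSketch {

    // Stand-in for the generated XML-side address class.
    static class XmlAddress {
        final String street;
        final String city;
        final String country;

        XmlAddress(String street, String city, String country) {
            this.street = street;
            this.city = city;
            this.country = country;
        }
    }

    // Stand-in for the generated JSON-side address class.
    static class JsonAddress {
        String streetname;
        String cityname;
        String countryname;
    }

    // Keeps only the addresses in "testcountry2" and converts them to the JSON model.
    static ArrayList<JsonAddress> map(ArrayList<XmlAddress> input) {
        return input.stream()
                .filter(address -> "testcountry2".equals(address.country))
                .map(FilterSketch::toJson)
                .collect(Collectors.toCollection(ArrayList::new));
    }

    // Copies all three fields from the XML-side address to the JSON-side address.
    static JsonAddress toJson(XmlAddress source) {
        JsonAddress target = new JsonAddress();
        target.streetname = source.street;
        target.cityname = source.city;
        target.countryname = source.country;
        return target;
    }

    public static void main(String[] args) {
        ArrayList<XmlAddress> input = new ArrayList<>(Arrays.asList(
                new XmlAddress("teststreet", "testcity", "testcountry"),
                new XmlAddress("teststreet2", "testcity2", "testcountry2")));
        for (JsonAddress address : map(input)) {
            System.out.println(address.streetname + ", " + address.cityname + ", " + address.countryname);
        }
    }
}
```

Comparing against the literal first (`"testcountry2".equals(...)`) keeps the filter from failing on an address without a country.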

Although the data transformation functionality is very nice, I am a bit disappointed that a custom Java class has to be created for such a simple and common use case as filtering. This probably means that for most data transformations we still cannot eliminate custom code, which is something a graphical data transformation tool should aim for. But in all fairness, the data transformation is still in tech preview, so who knows how it will look and perform once it is officially supported.