In our last couple of beer blogs we stuck to a plain Docker setup. That already made our business a lot more professional and enabled us to ship beers at a far larger scale. But we could always use another boost in our sales! High availability and scalability are what we need. There’s always some last-minute Oktoberfest or beer festival going on these days, so we need ways to scale up our production to meet increasing demand and ways to scale down quickly again when the festival people need to recuperate from too much alcohol intake.

We’ve already gotten our software setup down to containers. What’s the next step?! Kubernetes, of course!! In this first part of a blog series on Kubernetes we’ll start with the mother of it all, i.e. the Google Kubernetes Engine (GKE). We’ll show you how to set up a simple and cheap cluster and run a setup pretty similar to the one in our previous two posts, i.e. a load balancer serving a cluster of Spring Boot REST services talking to a MySQL database, all running on GKE.

Getting ready

Google Cloud Platform

First things first! You’ll need some tools of the trade on your development machine. Make sure you have a Google account set up for a free Cloud trial. And, while you’re at it, create a project in the Google Cloud Platform to use for your first Kubernetes cluster.

The next step is to install the Google Cloud SDK on your machine. This quickstart shows you how to do it on Ubuntu. If, at any time, you need to check your current Google Cloud SDK configuration, e.g. to check which project is currently configured, you can issue the following command:

    gcloud config list

To be able to build your Kubernetes cluster, make sure you have the following APIs enabled in your project:

- Compute Engine API

- Kubernetes Engine API

For more information on how to check and enable APIs go to this Google Cloud Platform page.
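If you prefer the command line over the console, the two APIs can probably be enabled with the SDK as well. The snippet below is a minimal sketch: the function name enable_gke_apis is made up for this post, and it assumes gcloud is already pointing at the right project (check with gcloud config list).

```shell
# Hypothetical helper: enable the two APIs GKE needs in the current project.
#   compute.googleapis.com   -> Compute Engine API
#   container.googleapis.com -> Kubernetes Engine API
enable_gke_apis() {
  gcloud services enable compute.googleapis.com container.googleapis.com
}

# Usage (needs an authenticated gcloud):
#   enable_gke_apis
```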

Kubectl

Finally, make sure to install the Kubernetes command-line tool kubectl. You can use this guide to do it.

Provisioning the cluster

With the help of the Google Cloud SDK let’s create our first small Kubernetes Cluster:

    gcloud container clusters create terrax --num-nodes 3 --machine-type g1-small

This will provision the cluster (which can take a few minutes), grant kubectl access to that cluster and, last but not least, set that cluster as the current context of kubectl.

You can also create the cluster via the Google Cloud Platform console, but then you need to perform these last two steps manually (granting kubectl access to the cluster and setting the cluster as the current context):

    gcloud container clusters get-credentials terrax

Regardless of whether you’ve used the console or the SDK, you should now be able to see your Kubernetes cluster on Google Cloud Platform.

To get this cluster information via the SDK use:

    gcloud container clusters list

By now the cluster should have been added to the current context of kubectl. You can check this with the following command:

    kubectl config current-context

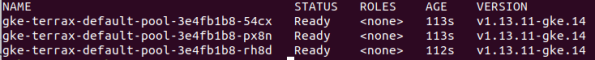

You should also be able to check the nodes of the cluster:

    kubectl get nodes

If you created more clusters, you can get an overview of the whole configuration by issuing:

    kubectl config view

For more information on getting your kubectl connected to one of your clusters, you can check this documentation.

If you ever feel the need to delete a cluster with the help of the SDK, you can issue the following statement (note that the deletion can take a few minutes):

    gcloud container clusters delete terrax

Terrax Application Kubernetes Setup

We’re going to create a setup resembling the one we built in our previous blog with docker compose.

We have a LoadBalancer up front serving our Spring Boot REST API. The Spring Boot app is running as part of a Deployment, so its pods can be scaled, and is talking to a MySQL database via a plain Service. The database is running as a pod as part of a StatefulSet and uses a PersistentVolumeClaim for storage. The mysql-secrets Secret is used to store the MySQL database password needed by the Spring Boot service.

The whole setup is split into two YAML descriptors, one for the database layer (database/database.yml) and one for the service layer (service/service.yml). The final code for these descriptors can be found at GitHub.

Database layer

Descriptor file

The database/database.yml file takes care of the stateful database layer of the application:

    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: mysql-data-disk
      namespace: tb-demo
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 25Mi
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: mysql-stateful-set
      namespace: tb-demo
      labels:
        app: database
    spec:
      replicas: 1
      serviceName: mysql-service
      selector:
        matchLabels:
          app: database
      template:
        metadata:
          labels:
            app: database
        spec:
          containers:
            - name: mysql
              image: rphgoossens/tb-mysql-docker:tb-docker-2.0
              ports:
                - name: mysql
                  containerPort: 3306
              volumeMounts:
                - mountPath: "/var/lib/mysql"
                  subPath: "mysql"
                  name: mysql-data
          volumes:
            - name: mysql-data
              persistentVolumeClaim:
                claimName: mysql-data-disk
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql-service
      namespace: tb-demo
    spec:
      selector:
        app: database
      ports:
        - protocol: TCP
          port: 3306
          targetPort: mysql

The descriptor file should be relatively self-explanatory. The setup is mostly based on the information found on this page, with the following changes:

- The PersistentVolumeClaim gets fulfilled by a persistent volume that is dynamically provisioned by GKE (for more information check this documentation);

- A StatefulSet is the recommended controller for running stateful pods. A StatefulSet always requires a serviceName (as opposed to a Deployment controller). The serviceName points to the mysql-service service declared at the bottom;

- Instead of the default mysql image I’m using the rphgoossens/tb-mysql-docker:tb-docker-2.0 image I’ve been using in the past couple of blogs. This image differs from the default mysql image in that it comes with environment variables preset and with a startup script that creates the Beer and Brewery tables.

Notice that all components reside in the tb-demo namespace. It’s always a good practice to group related services in a separate namespace. Note that the namespace has to be created first (you could also put the definition in a yaml descriptor of course):

    kubectl create namespace tb-demo
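For the descriptor route, a Namespace definition is only a few lines of YAML. The sketch below wraps it in a small shell function so it can be piped straight into kubectl; the function name namespace_manifest is an invention for this post, not something from the repository.

```shell
# Hypothetical helper: print a Namespace descriptor for the given name.
namespace_manifest() {
  cat <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: $1
EOF
}

# Usage (needs a cluster in the current kubectl context):
#   namespace_manifest tb-demo | kubectl apply -f -
```

Unlike kubectl create, kubectl apply does not fail when the namespace already exists, which makes this variant a bit friendlier to re-run.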

To keep things simple we’re going for a database layer with only one pod. More information on running a stateful single-instance MySQL database on Kubernetes can be found in this documentation.

Applying the descriptor

Let’s apply the database.yml descriptor:

    kubectl apply -f database/database.yml

You should see the following output:

    persistentvolumeclaim/mysql-data-disk created
    statefulset.apps/mysql-stateful-set created
    service/mysql-service created

To prevent us from having to type --namespace=tb-demo after every kubectl command, we can set the tb-demo namespace in the current context (more information on namespaces can be found here):

    kubectl config set-context --current --namespace=tb-demo

Now you can check the pods in the tb-demo namespace and you should see one stateful pod running.

    kubectl get pods


Now, how do we check whether the database pod has been set up correctly? The easiest way is to proxy the pod using port forwarding. You can use either the pod or the service exposing the pod for that.

For the pod, issue:

    kubectl port-forward mysql-stateful-set-0 3306:3306

For the service, issue (notice the service/ prefix):

    kubectl port-forward service/mysql-service 3306:3306

Now, using a database client like IntelliJ, you should be able to connect and view two empty tables in the db_terrax database by using the JDBC URL jdbc:mysql://127.0.0.1:3306/db_terrax, with username/password tb_admin/tb_admin.

So, our database pod is running nicely. Let’s get our service layer going so we can actually put some data in the database.

Service layer

Descriptor file

The service/service.yml file takes care of the stateless service layer of the application:

    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: mysql-secrets
      namespace: tb-demo
    type: Opaque
    data:
      DB_PASSWORD: dGJfYWRtaW4=
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: springboot-deployment
      namespace: tb-demo
      labels:
        app: service
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: service
      template:
        metadata:
          labels:
            app: service
        spec:
          containers:
            - name: springboot-app
              image: rphgoossens/tb-springboot-docker:tb-docker-2.0
              resources:
                limits:
                  memory: "512Mi"
                  cpu: "200m"
              ports:
                - name: springboot
                  containerPort: 8090
              env:
                - name: SERVER_PORT
                  value: "8090"
                - name: DB_USERNAME
                  value: tb_admin
                - name: DB_PASSWORD
                  valueFrom:
                    secretKeyRef:
                      name: mysql-secrets
                      key: DB_PASSWORD
                - name: DB_URL
                  # JDBC URL pointing at mysql-service (value missing here)
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: springboot-service
      namespace: tb-demo
    spec:
      type: LoadBalancer
      selector:
        app: service
      ports:
        - name: http
          port: 80
          targetPort: springboot

Again this descriptor file should be pretty self-explanatory. A lot of inspiration was taken from this article. Some pointers here:

- Again this service layer is running in the tb-demo namespace;

- Currently 2 Spring Boot pods are being spawned. We’re going to play a bit with this number later on;

- Since we’re dealing with a stateless layer this time, our layer of abstraction is a Deployment, not a StatefulSet. For more information on Deployments check this documentation;

- We’re using the same Spring Boot image we’ve used in our previous blog posts;

- Notice that the CPU and memory settings are quite high. If you lower the CPU setting, the startup time will increase correspondingly. As for the memory setting: a lower setting can lead to a crash of the pod on startup;

- The image relies on some environment variables to connect to the database layer (for more information on environment variables, check this documentation). The password has been put in a Secret. Note that the DB_PASSWORD value is nothing more than the literal tb_admin in BASE64 encoding. Also note that the jdbc url is pointing to the service (mysql-service) exposing the database pod, not the pod itself;

- We’re using a LoadBalancer this time to expose the Spring Boot pods. This will grant us an external IP to connect to. For more information on services check out this documentation.
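The DB_PASSWORD value in the Secret can be reproduced on the command line. The sketch below assumes a base64 utility that accepts --decode (GNU coreutils and macOS both ship one); the variable names are just for illustration. Keep in mind that BASE64 is an encoding, not encryption: anyone who can read the Secret can decode it.

```shell
# Encode the literal password; printf avoids the trailing newline echo would add.
encoded="$(printf '%s' 'tb_admin' | base64)"
echo "$encoded"   # dGJfYWRtaW4=

# Decode it again to verify the round trip.
decoded="$(printf '%s' "$encoded" | base64 --decode)"
echo "$decoded"   # tb_admin
```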

Applying the descriptor

Let’s apply the service.yml descriptor:

    kubectl apply -f service/service.yml

You should see the following output:

    secret/mysql-secrets created
    deployment.apps/springboot-deployment created
    service/springboot-service created

Let’s check our pods:

    kubectl get pods

It could take a while for the pods to start properly (a minute or two), but eventually you should see three running pods.

If this is not the case (maybe the pods keep restarting), you should check the logs first:

    kubectl logs springboot-deployment-6c74d7dc4b-9jv9j

If all went well, the picture below should resemble the last lines of the logging

For more information about a specific pod, you can use the describe command:

    kubectl describe pod springboot-deployment-6c74d7dc4b-9jv9j

This will give you, among other things, an overview of the environment variables and their values, the port numbers, the image the pod is based on and a list of important events that occurred during the lifetime of the pod.

Both describe and get commands can be used for all kinds of Kubernetes objects, like service, secret and deployment to name but a few.
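Instead of re-running kubectl get pods until everything is up, you could let kubectl block until the Deployment has finished rolling out. This is a minimal sketch: the helper name wait_for_rollout and the default timeout of 180s are my own choices, and it assumes the tb-demo namespace is set in the current context.

```shell
# Hypothetical helper: wait until a Deployment has rolled out, or give up
# after the timeout (second argument, defaults to 180s).
wait_for_rollout() {
  kubectl rollout status "deployment/$1" --timeout="${2:-180s}"
}

# Usage (needs a running cluster):
#   wait_for_rollout springboot-deployment
#   wait_for_rollout springboot-deployment 5m
```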

Testing the application

Now we should be able to test our application. For this we need the external IP address of the load balancer service:

    kubectl get service springboot-service


Now, armed with the external IP address, we can access the Swagger UI page, with which we can POST some content to our database: http://34.90.68.152/swagger-ui.html

You can check the actuator endpoint to see which pod you have landed on: http://34.90.68.152/actuator/env/HOSTNAME:

    …
    {
      "name": "systemEnvironment",
      "property": {
        "value": "springboot-deployment-6c74d7dc4b-9jv9j",
        "origin": "System Environment Property \"HOSTNAME\""
      }
    },
    …
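If you want to script this instead of copying the IP address by hand, kubectl can print just that field via a JSONPath expression. The helper name external_ip below is hypothetical, and the sketch assumes the load balancer has already been assigned an IP address (while the address is still pending the output is simply empty).

```shell
# Hypothetical helper: print the external IP of a LoadBalancer service.
external_ip() {
  kubectl get service "$1" -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Usage (needs a running cluster):
#   ip="$(external_ip springboot-service)"
#   curl -s "http://${ip}/actuator/env/HOSTNAME"
```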

Playing with pods

Let’s first check our running pods:

    kubectl get pods

Now kill one of the pods:

    kubectl delete pod springboot-deployment-6c74d7dc4b-bd9pf

Now if you check the pods again, you’ll see that the pod you just deleted has been replaced by a new pod:

Let’s say we just noticed that our orders are rapidly increasing thanks to a successful campaign and we want to update our replicas to meet this increased demand. Let’s increase them to 3 (I know it’s not much more, but the small cluster I spun up can’t handle more than 3 pods). One way to do it is to update our YAML descriptor file and apply it again. Another way is via the kubectl scale command:

    kubectl scale deployment springboot-deployment --replicas=3

Checking our pods again, you should see that a third pod has been spun up:

Scaling down again can be done with the same command.
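To get a feel for how requests are spread over the pods, you could hit the actuator endpoint a few times and count which pod answered. The sketch below is only an illustration: pod_from_env is a made-up helper that pulls the first "value" field out of the JSON with grep and cut, which is good enough for this response but is no real JSON parser. The distribution you see need not be even.

```shell
# Hypothetical helper: read an /actuator/env/HOSTNAME response on stdin
# and print the pod name found in its first "value" field.
pod_from_env() {
  grep -o '"value": *"[^"]*"' | head -n 1 | cut -d'"' -f4
}

# Usage (needs the running application; replace the IP with your own):
#   ip=34.90.68.152
#   for i in $(seq 1 10); do
#     curl -s "http://${ip}/actuator/env/HOSTNAME" | pod_from_env
#   done | sort | uniq -c
```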

That’s it for now. We’ve seen that we can scale up and scale down our stateless pods by demand very easily and very quickly. Pretty cool!

Cleanup

Deleting all components is as easy as deleting the changes made by the descriptor files:

    kubectl delete -f service/service.yml
    kubectl delete -f database/database.yml

Or, as mentioned before, if you want to clean up the entire cluster:

    gcloud container clusters delete terrax

Summary

In this blog post we’ve taken our first steps in the world of Kubernetes. We’ve provisioned our first Kubernetes cluster on the Google Cloud Platform using the Google Kubernetes Engine.

We made extensive use of the kubectl CLI to interact with our Kubernetes cluster. We’ve actually only touched upon a small part of the kubectl commands. For a good overview of kubectl commands check out this cheat sheet.

We’ve set up a stateful REST application on our cluster and seen how easy it is to scale up the stateless service layer.

We’ve kept our stateful database layer as simple as possible and stuck to just one pod. For more information on setting up a replicated stateful layer, check out this documentation.

The current setup could still be improved upon. We could expand it with health and readiness probes, use a ConfigMap for the other environment variables, use the Kubernetes API, or even start exploring Kubernetes on other cloud platforms. So much to discover still!

Let’s see where the next blog post in the series will bring us. Till then: stay calm and have a beer!!

References

- https://github.com/rphgoossens/tb-app-gke

- https://rphgoossens.wordpress.com/2019/11/19/on-beer-composition-orchestrating-our-containers-with-docker-compose/

- https://cloud.google.com/sdk/docs/quickstart-debian-ubuntu

- https://console.cloud.google.com/apis

- https://kubernetes.io/docs/tasks/tools/install-kubectl/

- https://cloud.google.com/kubernetes-engine/docs/how-to/cluster-access-for-kubectl

- https://cloud.google.com/kubernetes-engine/docs/concepts/persistent-volumes

- https://www.serverlab.ca/tutorials/containers/kubernetes/how-to-deploy-mysql-server-5-7-to-kubernetes/

- https://kubernetes.io/docs/tasks/run-application/run-single-instance-stateful-application/

- https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/

- https://www.callicoder.com/deploy-spring-mysql-react-nginx-kubernetes-persistent-volume-secret/

- https://kubernetes.io/docs/concepts/workloads/controllers/deployment/

- https://kubernetes.io/docs/concepts/services-networking/connect-applications-service/

- https://kubernetes.io/docs/tasks/inject-data-application/define-environment-variable-container/

- https://kubernetes.io/docs/reference/kubectl/cheatsheet/

- https://kubernetes.io/docs/tasks/run-application/run-replicated-stateful-application/